[Solved] Help needed for face detection - deep learning

-

I have code for detecting faces, but now I need to count the number of faces.

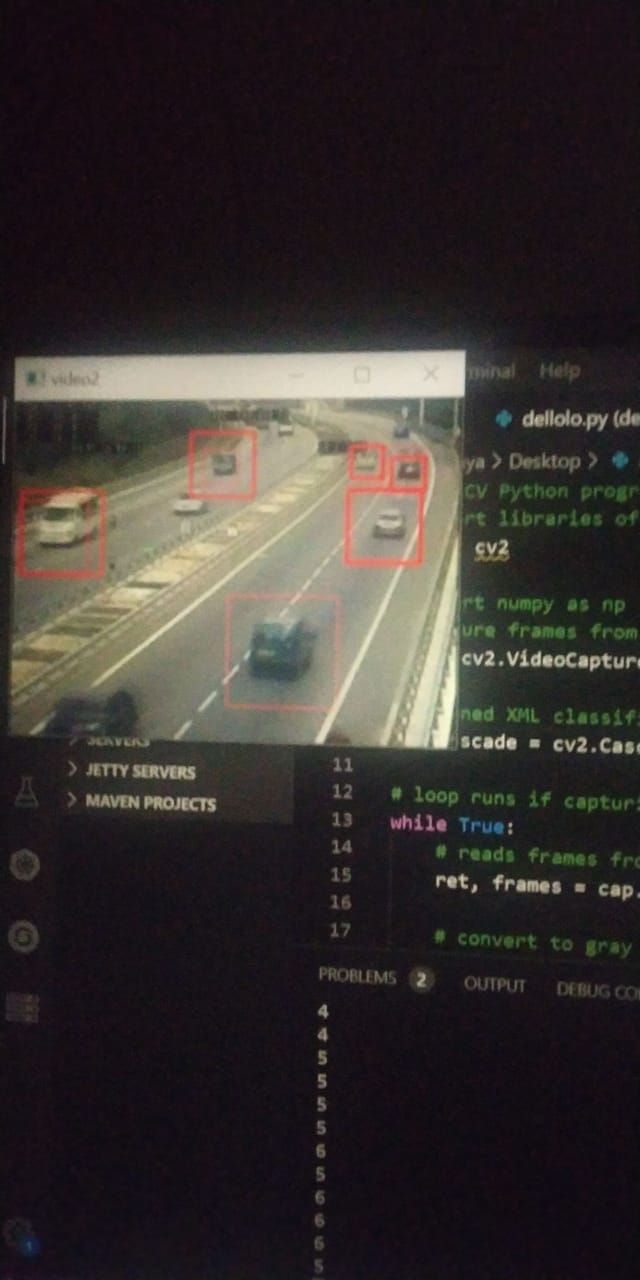

```python
# OpenCV Python program to detect cars in a video
import cv2

# Capture frames from a video
cap = cv2.VideoCapture('video.avi')

# Trained XML classifier that describes features of the object we want to detect
car_cascade = cv2.CascadeClassifier('cars.xml')

# Loop while frames can be read
while True:
    # Read a frame from the video
    ret, frames = cap.read()
    if not ret:  # stop at the end of the video (or on a read error)
        break

    # Convert each frame to grayscale
    gray = cv2.cvtColor(frames, cv2.COLOR_BGR2GRAY)

    # Fill the displayed frame with white (same as frames[:] = 255)
    frames.fill(255)

    # Detect cars of different sizes in the input image
    cars = car_cascade.detectMultiScale(gray, 1.1, 1)

    # Draw a filled black rectangle over each car
    for (x, y, w, h) in cars:
        cv2.rectangle(frames, (x, y), (x + w, y + h), (0, 0, 0), -1)

    # Display frames in a window
    cv2.imshow('video2', frames)

    # Wait for the Esc key to stop
    if cv2.waitKey(33) == 27:
        break

# Release the video and close any open windows
cap.release()
cv2.destroyAllWindows()
```

-

Hi @Nandu, you can increment a variable each time a face is detected. Does that help?
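A minimal sketch of where such a counter could go, based on the loop posted above. Assumptions: `video.avi` and `cars.xml` are the file names from that code (load a face cascade XML instead to count faces), and the names `update_counts` and `count_in_video` are hypothetical. The counter is reset for every frame, because a single variable incremented across all frames would count the same face again in each frame; the sketch also remembers the highest per-frame count.

```python
def update_counts(boxes, peak):
    """Count the (x, y, w, h) boxes found in one frame and update the peak count."""
    count = 0  # reset for every frame
    for _ in boxes:
        count += 1  # increment once per detected box
    return count, max(peak, count)


def count_in_video(video_path, cascade_path):
    """Show a live per-frame count and return the highest count seen in any frame."""
    import cv2  # imported here so update_counts() can be reused without OpenCV

    cap = cv2.VideoCapture(video_path)
    cascade = cv2.CascadeClassifier(cascade_path)  # e.g. a face cascade to count faces
    peak = 0
    while True:
        ret, frames = cap.read()
        if not ret:  # end of the video
            break
        gray = cv2.cvtColor(frames, cv2.COLOR_BGR2GRAY)
        frames.fill(255)
        boxes = cascade.detectMultiScale(gray, 1.1, 1)

        # The counter belongs here: after detection, once per frame
        count, peak = update_counts(boxes, peak)

        for (x, y, w, h) in boxes:
            cv2.rectangle(frames, (x, y), (x + w, y + h), (0, 0, 0), -1)
        cv2.putText(frames, 'Count: {}'.format(count), (10, 30),
                    cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2)
        cv2.imshow('video2', frames)
        if cv2.waitKey(33) == 27:  # Esc
            break

    cap.release()
    cv2.destroyAllWindows()
    return peak
```

Usage: `print(count_in_video('video.avi', 'cars.xml'))`. Note that `len(boxes)` gives the same per-frame number; the explicit loop only shows where an incrementing variable would live.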

-

@salmanfaris Yes, that's what I want. But I don't understand where I should put the variable in the code above.

-

Follow these steps:

- Create a virtual environment and activate it:

```
python -m venv venv
```

Activate the venv on Windows using the following command:

```
.\venv\Scripts\activate
```

For Ubuntu:

```
source venv/bin/activate
```

- Install the necessary packages in the venv:

```
pip install opencv-python
pip install imutils
```

- Create the folder structure shown below in your workspace:

```
TestPrograms
├─ cascades
│  └─ haarcascade_frontalface_default.xml
├─ detect_faces.py
├─ images
│  └─ obama.jpg
└─ utilities
   └─ facedetector.py
```

- Program for utilities/facedetector.py:

```python
import cv2


class FaceDetector:
    def __init__(self, face_cascade_path):
        # Load the face detector
        self.face_cascade = cv2.CascadeClassifier(face_cascade_path)
        if self.face_cascade.empty():
            raise IOError("Could not load cascade: " + face_cascade_path)

    def detect(self, image, scale_factor=1.2, min_neighbors=3):
        # Detect faces in the image
        boxes = self.face_cascade.detectMultiScale(
            image, scale_factor, min_neighbors,
            flags=cv2.CASCADE_SCALE_IMAGE, minSize=(30, 30))
        # Return the bounding boxes
        return boxes
```

- Program for detect_faces.py:

```python
from utilities.facedetector import FaceDetector
import imutils
import cv2

# Define paths
image_path = 'images/obama.jpg'
cascade_path = 'cascades/haarcascade_frontalface_default.xml'

# Load the image and convert it to greyscale
image = cv2.imread(image_path)
if image is None:
    raise FileNotFoundError(image_path)
image = imutils.resize(image, width=600)  # height is scaled to keep the aspect ratio
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Find faces in the image; the number of boxes is the face count
detector = FaceDetector(cascade_path)
face_boxes = detector.detect(gray, 1.2, 5)
print("{} face(s) found".format(len(face_boxes)))

# Loop over the faces and draw a rectangle around each
for (x, y, w, h) in face_boxes:
    cv2.rectangle(image, (x, y), (x + w, y + h), (0, 255, 0), 2)

# Show the detected faces until a key is pressed
cv2.imshow("Faces", image)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

- Links to the necessary files:

Haar cascade frontal face

Obama Family Image

-

@arunksoman Thank you 🥳

-

@Nandu I should mention that this is not a deep learning method. It is based on the Viola-Jones algorithm, which evaluates Haar-like features quickly using integral images and combines them in a boosted cascade of classifiers; it is a classical machine learning technique. OpenCV also has a DNN module (part of the main library since version 3.3) that can load Caffe, Torch, and TensorFlow models. Pretrained Caffe models for face detection are available online, and with those, face detection can be done quite effectively. If you have any doubts, feel free to ask here.
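A minimal sketch of counting faces with OpenCV's DNN module instead of a Haar cascade. It assumes OpenCV's sample ResNet-10 SSD face detector, whose files are commonly named `deploy.prototxt` and `res10_300x300_ssd_iter_140000.caffemodel` (they must be downloaded separately). The 300x300 input size, the mean values `(104.0, 177.0, 123.0)`, and the 0.5 confidence threshold follow OpenCV's face-detector sample and are assumptions here; the function names are hypothetical.

```python
def boxes_from_detections(detections, width, height, conf_threshold=0.5):
    """Turn SSD output of shape (1, 1, N, 7) into pixel boxes above a threshold.

    Each row is [image_id, class_id, confidence, x1, y1, x2, y2], with the
    corner coordinates normalised to the range 0..1.
    """
    boxes = []
    for row in detections[0][0]:
        if float(row[2]) < conf_threshold:
            continue  # skip low-confidence detections
        x1, y1, x2, y2 = (float(v) for v in row[3:7])
        boxes.append((int(x1 * width), int(y1 * height),
                      int(x2 * width), int(y2 * height)))
    return boxes


def count_faces_dnn(image_path, prototxt_path, model_path, conf_threshold=0.5):
    """Run a Caffe SSD face detector on one image and return the face boxes."""
    import cv2  # imported here so boxes_from_detections() works without OpenCV

    net = cv2.dnn.readNetFromCaffe(prototxt_path, model_path)
    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(image_path)
    height, width = image.shape[:2]
    # Assumed preprocessing: 300x300 input with per-channel BGR means subtracted
    blob = cv2.dnn.blobFromImage(cv2.resize(image, (300, 300)), 1.0, (300, 300),
                                 (104.0, 177.0, 123.0))
    net.setInput(blob)
    return boxes_from_detections(net.forward(), width, height, conf_threshold)
```

Usage: `boxes = count_faces_dnn('images/obama.jpg', 'deploy.prototxt', 'res10_300x300_ssd_iter_140000.caffemodel')`, then `len(boxes)` is the face count, just as `len(face_boxes)` is in the cascade version.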

-

@arunksoman How does this code help me count faces if deep learning isn't used?

-

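@Nandu Please read the comment above carefully and look up how the Viola-Jones algorithm works. The code still counts faces: `len(face_boxes)` is the number of detected faces, whether or not deep learning is used. Sorry for misunderstanding what you said; that is why I edited my comment.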
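To illustrate the integral-image idea behind Viola-Jones, here is a toy pure-Python sketch (not OpenCV's cascade code). Once the integral image is built, the sum of any rectangle takes four lookups, which is what makes evaluating many Haar-like features per detection window cheap. The function names are hypothetical; OpenCV's `cv2.integral` returns the same (rows + 1) x (cols + 1) layout with a leading row and column of zeros.

```python
def integral_image(img):
    """Return ii where ii[y][x] is the sum of img over rows < y and columns < x.

    The extra zero row and column avoid special cases at the borders.
    """
    rows, cols = len(img), len(img[0])
    ii = [[0] * (cols + 1) for _ in range(rows + 1)]
    for y in range(rows):
        row_sum = 0
        for x in range(cols):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle with top-left corner (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """A horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

For the 3x3 image `[[1, 2, 3], [4, 5, 6], [7, 8, 9]]`, `rect_sum(ii, 1, 1, 2, 2)` gives 5 + 6 + 8 + 9 = 28 regardless of the rectangle's size, which is the property the cascade relies on.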

-

@Nandu Did you finish it? Excited to see it.

-

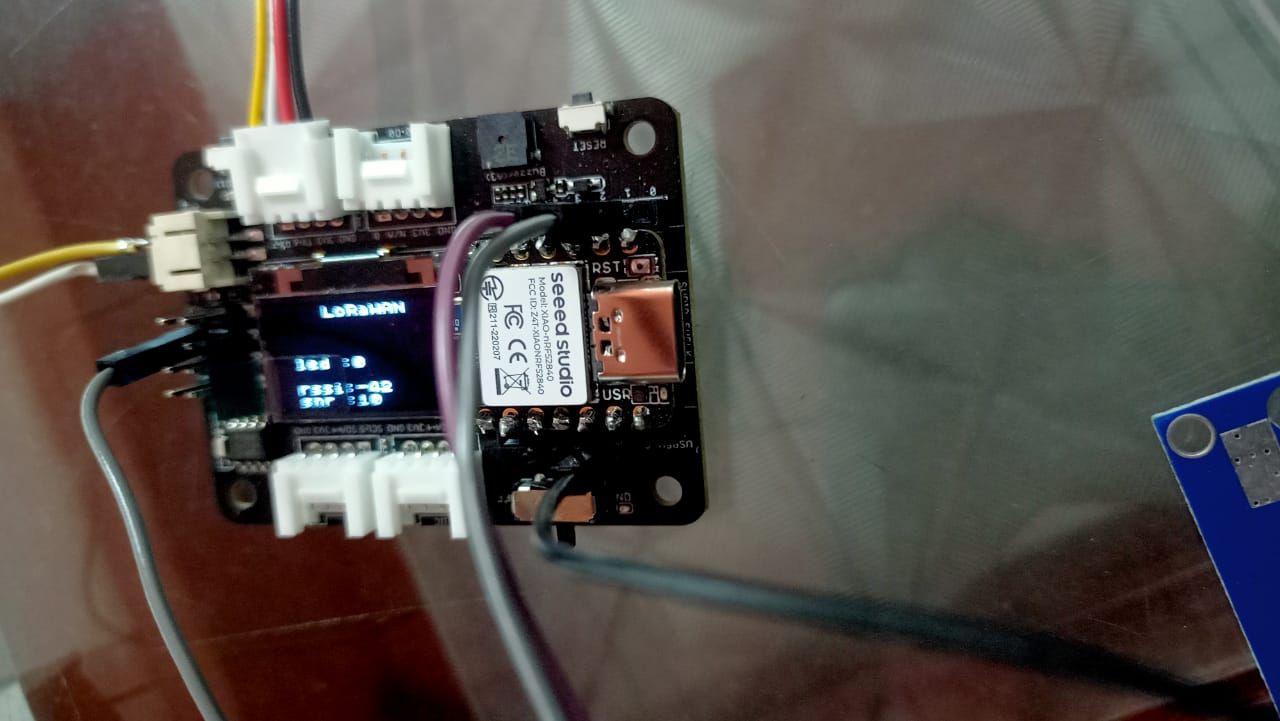

@salmanfaris The count is shown in the terminal in the screenshot. I followed some of the steps in a different way. Thank you for helping me!