@arunksoman, any idea on this?

Posts made by sreu13

-

RE: Error on Raspberry PI 4 while opening TensorFlow. (posted in General Discussion)

@salmanfaris Actually, it is not solved at all! The problem is that I do not know how to send data from the Raspberry Pi to a Google VM and get the results back from the VM to the RPi 4. I've researched a lot online but can't find a proper way to do it.
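One common pattern is to run a small HTTP service on the VM that accepts an image and replies with the prediction, and have the Pi POST each image to it. Below is a minimal sketch using only the Python standard library. It assumes the VM has an external IP and a firewall rule that lets the Pi reach the chosen port; the `/predict` path and `fake_predict` are hypothetical placeholders, and `fake_predict` would be replaced by the real model call. A real deployment would also need some form of authentication.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def fake_predict(image_bytes):
    """Hypothetical stand-in for the real model call on the VM."""
    return {"label": "unknown", "num_bytes": len(image_bytes)}


class PredictHandler(BaseHTTPRequestHandler):
    # Runs on the VM: receives raw image bytes via POST /predict, replies with JSON.
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        image_bytes = self.rfile.read(length)
        body = json.dumps(fake_predict(image_bytes)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet


def send_image(url, image_bytes, timeout=30):
    # Runs on the Pi: POSTs the image bytes and returns the decoded JSON reply.
    req = urllib.request.Request(
        url,
        data=image_bytes,
        method="POST",
        headers={"Content-Type": "application/octet-stream"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Local demo: start the "VM" server in a thread and call it as the "Pi".
    server = HTTPServer(("127.0.0.1", 0), PredictHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]
    print(send_image(f"http://127.0.0.1:{port}/predict", b"\xff\xd8fake-jpeg"))
    server.shutdown()
    server.server_close()
```

On the Pi, the image file would be read with `open(path, "rb").read()` and passed to `send_image` with the VM's address instead of `127.0.0.1`.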

-

RE: Error on Raspberry PI 4 while opening TensorFlow. (posted in General Discussion)

@arunksoman Thank you so much, your reply really took the stress off me. Can you point me to any documentation I could go through?

-

RE: Error on Raspberry PI 4 while opening TensorFlow. (posted in General Discussion)

@arunksoman Hi,

Actually, at this point I'm not willing to risk the RPi 4. I thought of connecting the RPi 4 to Google Cloud (I already have an account with $300 credit) and running the code there.

But I do not know whether the following process can be done:

- sending an image from the RPi 4 to the cloud
- using the cloud to run the prediction
- automating both steps, so that the image is sent to the cloud when the Pi boots up (the VM can be started whenever necessary)

Is this possible?
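For the "send when the Pi boots up" step, the script would be started at boot by something like a cron `@reboot` entry or a systemd service (not shown). At that moment the network, and possibly the VM, may not be ready yet, so a minimal sketch of a retry wrapper is shown below; `send_with_retry` is a hypothetical helper, and `send` would be something like a call that uploads the image.

```python
import time


def send_with_retry(send, attempts=10, delay=5.0, sleep=time.sleep):
    """Call send() until it succeeds or the attempts run out.

    Right after boot the network (or the VM) may not be reachable yet,
    so a failed attempt is retried after `delay` seconds instead of
    giving up immediately.
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return send()
        except OSError as err:  # urllib's URLError and socket timeouts are OSErrors
            last_error = err
            print(f"attempt {attempt} failed: {err}")
            if attempt < attempts:
                sleep(delay)
    raise RuntimeError(f"giving up after {attempts} attempts") from last_error
```

The `sleep` parameter is only there so the waiting can be replaced in tests; in normal use the default `time.sleep` is fine.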

-

RE: Error on Raspberry PI 4 while opening TensorFlow. (posted in General Discussion)

@arunksoman I'll try this method, but when I run the swap command, will Raspbian and the files on it be affected?
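To check whether a swap change actually took effect, the kernel's memory counters can be read from `/proc/meminfo` on Linux; reading it is read-only and changes nothing on the system. A minimal sketch (the helper names are hypothetical):

```python
import os


def parse_meminfo(text):
    """Return /proc/meminfo fields as a dict of ints (most values are in kB)."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def read_meminfo(path="/proc/meminfo"):
    with open(path) as f:
        return parse_meminfo(f.read())


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    info = read_meminfo()
    print("RAM  (MiB):", info.get("MemTotal", 0) // 1024)
    print("Swap (MiB):", info.get("SwapTotal", 0) // 1024)
```

Running it before and after the swap change and comparing `SwapTotal` shows whether the new swap size is active.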

-

RE: Error on Raspberry PI 4 while opening TensorFlow. (posted in General Discussion)

@arunksoman TensorFlow version 2.0.0 has been installed.
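When several Python installations exist, it helps to confirm which version the interpreter running the script actually imports. A small sketch that reads a module's `__version__` attribute (the helper name `module_version` is hypothetical; it avoids `importlib.metadata`, so it also works on Python versions older than 3.8):

```python
import importlib


def module_version(name):
    """Return `name.__version__` if the module can be imported, else None."""
    try:
        module = importlib.import_module(name)
    except ImportError:
        return None
    return getattr(module, "__version__", None)


if __name__ == "__main__":
    # Should match the TensorFlow version that was installed.
    print(module_version("tensorflow"))
```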

-

RE: Error on Raspberry PI 4 while opening TensorFlow. (posted in General Discussion)

@arunksoman I installed TensorFlow from the link given below

and I installed it without activating a virtual environment.

But this 10% memory issue is a real pain.
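Since TensorFlow was installed outside a virtual environment, it went into the system (or user) site-packages of whichever interpreter ran `pip`. A quick sketch to see which interpreter a script runs under and whether it is inside a venv (the helper name is hypothetical; conda environments are not detected by this check):

```python
import sys


def in_virtualenv():
    """True when running inside a venv/virtualenv (conda envs are not detected)."""
    # Old virtualenv versions set sys.real_prefix; the built-in venv module
    # instead makes sys.prefix differ from sys.base_prefix.
    if hasattr(sys, "real_prefix"):
        return True
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    print("interpreter:", sys.executable)
    print("inside a virtual environment:", in_virtualenv())
```

If `sys.executable` is not the interpreter `pip` installed TensorFlow into, the import will fail or pick up a different copy.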

-

RE: Error on Raspberry PI 4 while opening TensorFlow. (posted in General Discussion)

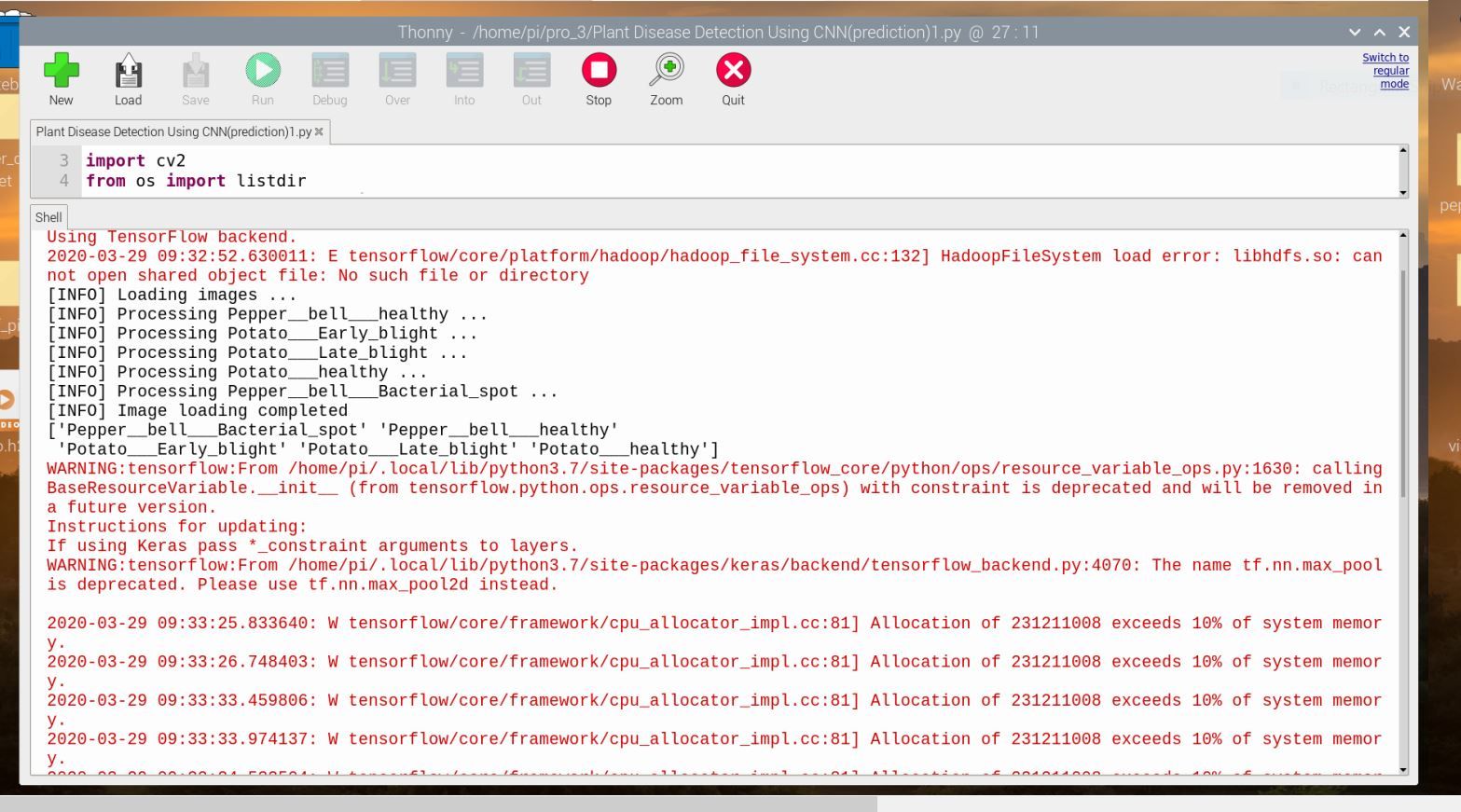

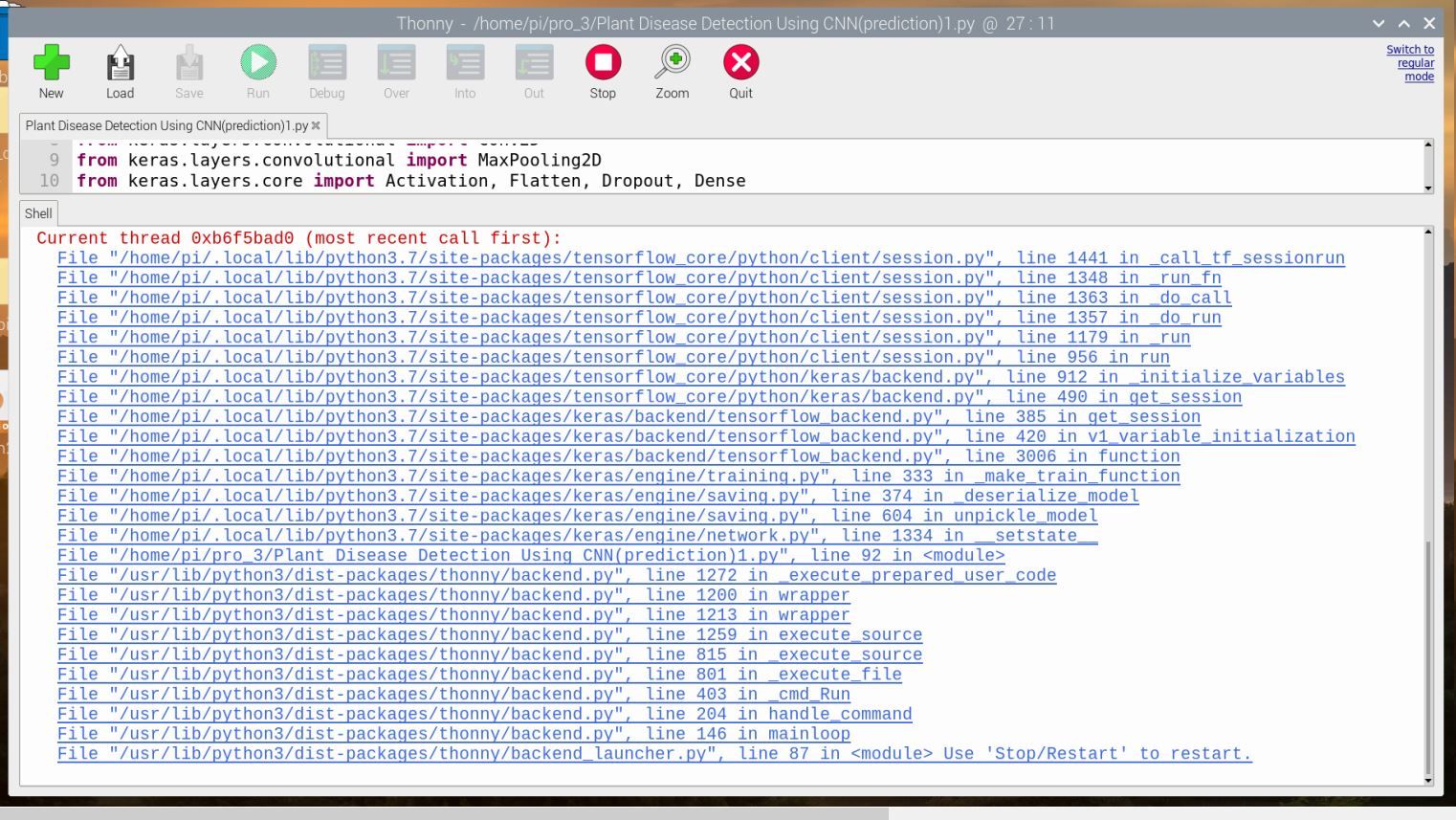

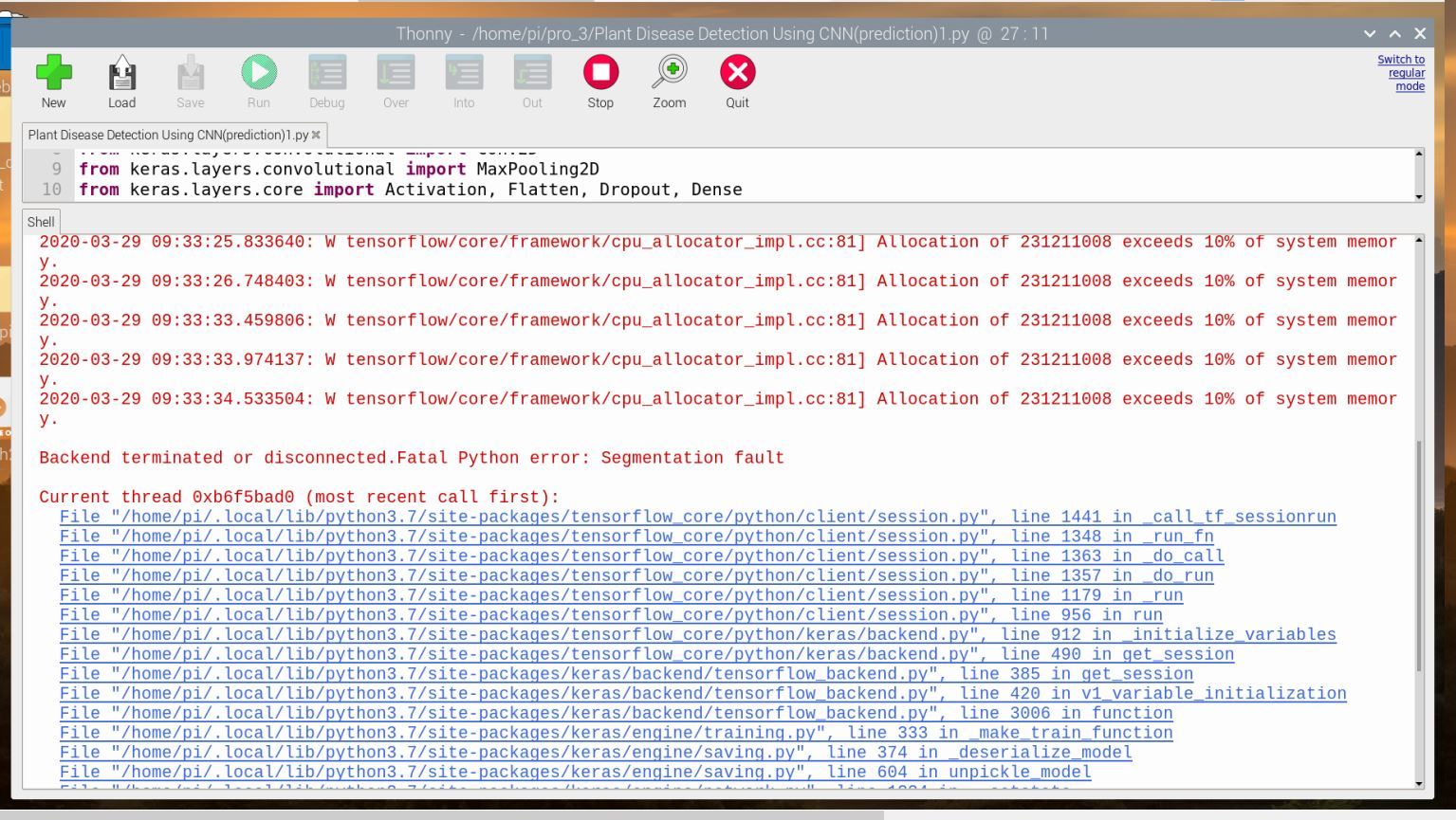

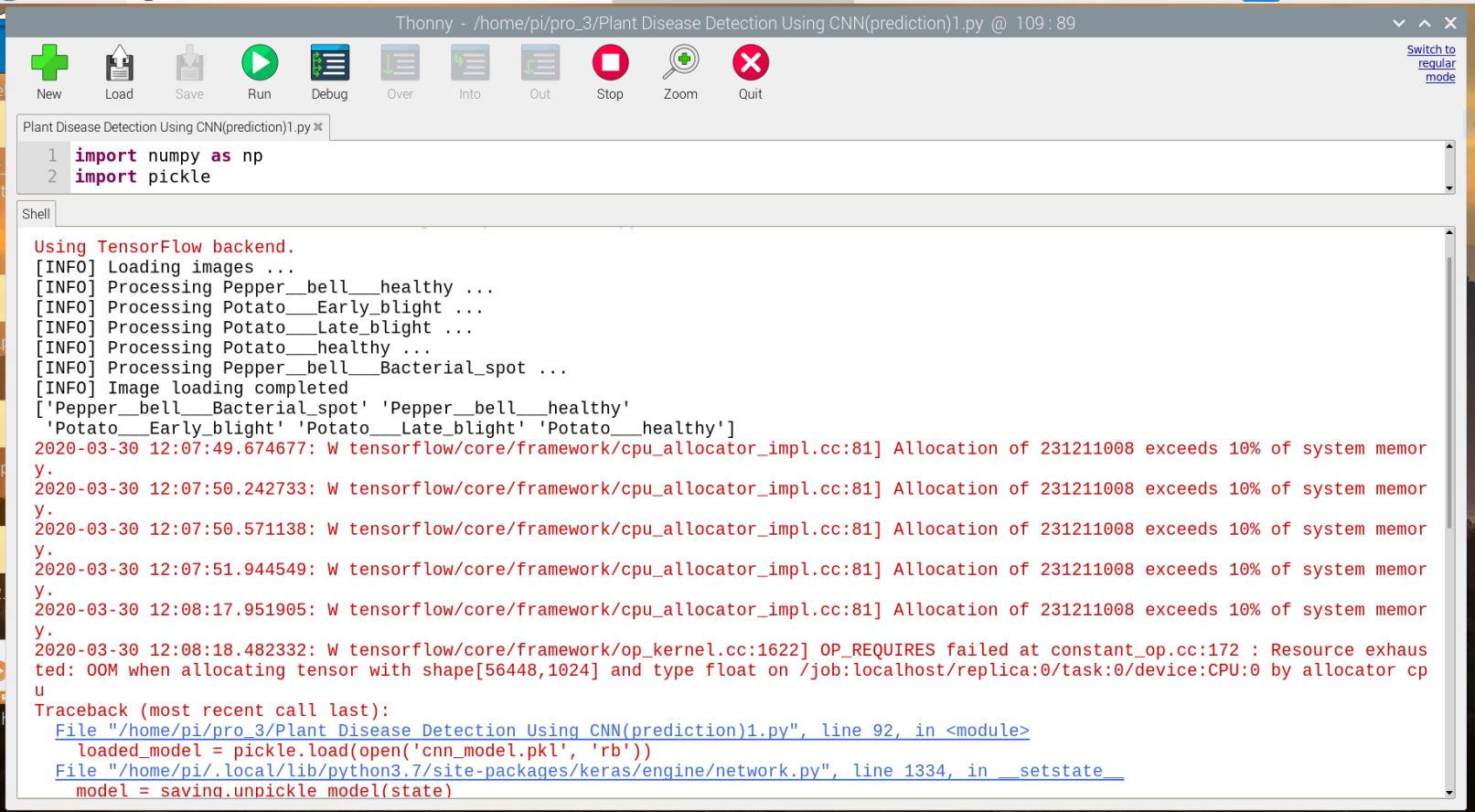

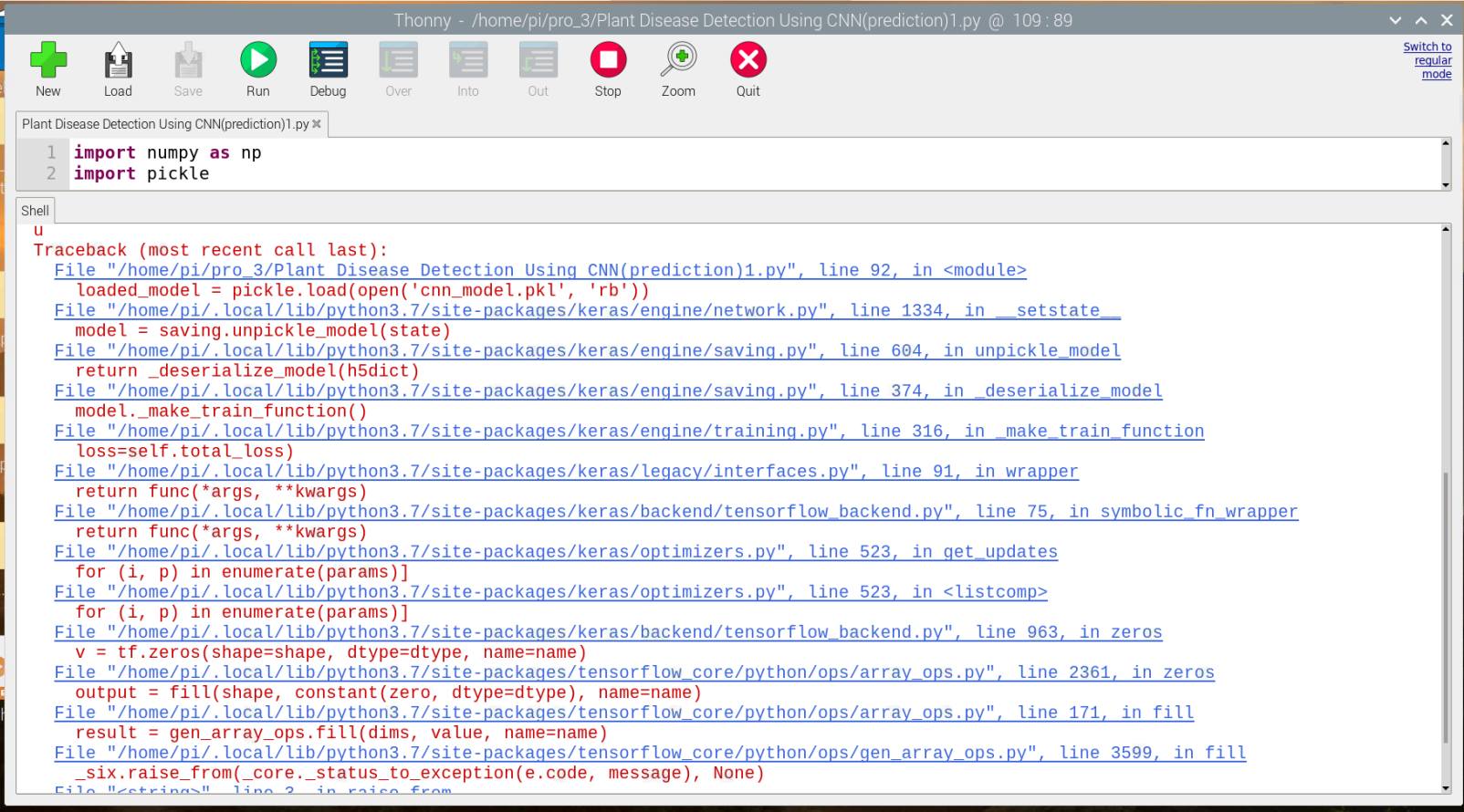

@arunksoman Hi, I've run this code using Python 3.7 on Anaconda and it worked fine.

But when I ran it on the RPi 4, it showed the Hadoop error. I then updated the libraries and the Hadoop error was solved.

Now there is a new error.

Also, can you take a look at the 10% memory error? What can be done about it?
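Assuming the "10% memory" message is TensorFlow's "Allocation of ... exceeds 10% of system memory" warning, it is triggered by a single large tensor allocation relative to the Pi's RAM. A rough sketch of the arithmetic; the helper names and the `(131072, 1024)` weight shape are hypothetical examples, not taken from the model in this thread:

```python
def tensor_bytes(shape, dtype_bytes=4):
    """Bytes needed for a dense tensor of `shape` (float32 = 4 bytes/element)."""
    n = 1
    for dim in shape:
        n *= dim
    return n * dtype_bytes


def exceeds_fraction(nbytes, total_bytes, fraction=0.10):
    """True if a single allocation is larger than `fraction` of total memory."""
    return nbytes > fraction * total_bytes


if __name__ == "__main__":
    ram = 4 * 1024 ** 3                      # e.g. a 4 GB Pi 4
    one_image = tensor_bytes((1, 256, 256, 3))
    dense_weights = tensor_bytes((131072, 1024))
    print(one_image, exceeds_fraction(one_image, ram))
    print(dense_weights, exceeds_fraction(dense_weights, ram))
```

A single 256x256x3 float32 image is only 786,432 bytes, whereas a large fully connected weight matrix like the example one is 512 MiB, which is above 10% of 4 GiB. Smaller input sizes, smaller batches, or narrower dense layers shrink the largest allocations.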

-

RE: [Solved] leaf disease detection using keras (posted in General Discussion)

@salmanfaris I know my reply was delayed; I wanted to finish the project before replying here!

I didn't figure out the exact reason, but the problem was solved. What I did: the label binarizer was working fine when I created the training model, so I loaded my trained model and deleted the training part of the script. I ended up with the code given below, and it worked fine.

```python
import pickle
from os import listdir

import cv2
import numpy as np
from keras.preprocessing.image import img_to_array
from sklearn.preprocessing import LabelBinarizer

default_image_size = (256, 256)
directory_root = 'PlantVillage'
width, height, depth = 256, 256, 3


# Function to convert an image file to a NumPy array
def convert_image_to_array(image_dir):
    try:
        image = cv2.imread(image_dir)
        if image is not None:
            image = cv2.resize(image, default_image_size)
            return img_to_array(image)
        else:
            return np.array([])
    except Exception as e:
        print(f"Error : {e}")
        return None


image_list, label_list = [], []
try:
    print("[INFO] Loading images ...")
    # Filter out macOS .DS_Store entries up front
    # (removing items from a list while iterating over it skips elements)
    root_dir = [d for d in listdir(directory_root) if d != ".DS_Store"]
    for plant_folder in root_dir:
        plant_disease_folder_list = [
            d for d in listdir(f"{directory_root}/{plant_folder}") if d != ".DS_Store"
        ]
        for plant_disease_folder in plant_disease_folder_list:
            print(f"[INFO] Processing {plant_disease_folder} ...")
            plant_disease_image_list = [
                f for f in listdir(f"{directory_root}/{plant_folder}/{plant_disease_folder}")
                if f != ".DS_Store"
            ]
            for image_name in plant_disease_image_list[:200]:
                image_directory = f"{directory_root}/{plant_folder}/{plant_disease_folder}/{image_name}"
                if image_directory.endswith((".jpg", ".JPG")):
                    image_list.append(convert_image_to_array(image_directory))
                    label_list.append(plant_disease_folder)
    print("[INFO] Image loading completed")
except Exception as e:
    print(f"Error : {e}")

image_size = len(image_list)

# Transform image labels using scikit-learn's LabelBinarizer
label_binarizer = LabelBinarizer()
image_labels = label_binarizer.fit_transform(label_list)
with open('label_transform.pkl', 'wb') as f:
    pickle.dump(label_binarizer, f)
n_classes = len(label_binarizer.classes_)

# Print the classes
print(label_binarizer.classes_)

# Load the saved (pickled) model
with open('cnn_model.pkl', 'rb') as f:
    model_disease = pickle.load(f)

# Load a plant leaf image
image_dir = "plantdisease/Validation_Set/Potato___Early_blight/1d301622-e359-49d5-b4ca-6837f254fd1b___RS_Early.B 6719.JPG"

# Convert the leaf image to an array and scale the pixel values.
# The divisor must match the one used at training time (this script uses 225.0;
# 255.0 is the usual value for 8-bit images, but the two scripts must agree).
im = convert_image_to_array(image_dir)
np_image_li = np.array(im, dtype=np.float16) / 225.0
npp_image = np.expand_dims(np_image_li, axis=0)

result = model_disease.predict(npp_image)
print(result)

# Print the most probable class and its probability
itemindex = np.where(result == np.max(result))
print("probability:" + str(np.max(result)) + "\n" + label_binarizer.classes_[itemindex[1][0]])
```

-
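The script above already pickles the fitted `LabelBinarizer` to `label_transform.pkl`, so at prediction time that file could be loaded instead of re-scanning the whole dataset just to rebuild the class list. A minimal sketch of that idea in plain Python; the helper names are hypothetical, and it assumes the class order saved at training time is the order of the model's output units:

```python
import pickle


def save_classes(classes, path):
    # At training time: persist the class names in output-unit order
    # (for example LabelBinarizer.classes_).
    with open(path, "wb") as f:
        pickle.dump(list(classes), f)


def load_classes(path):
    # At prediction time: no need to re-scan the image folders.
    with open(path, "rb") as f:
        return pickle.load(f)


def top_prediction(probabilities, classes):
    # Index of the largest probability (first one on ties, like np.argmax).
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return classes[best], probabilities[best]
```

With a Keras model, `probabilities` would be one row of the `predict` output, e.g. `list(result[0])`.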

Error on Raspberry PI 4 while opening TensorFlow. (posted in General Discussion)

Hi,

I came across this error after installing TensorFlow on my RPi 4.

Can you help me find a fix?

Error on Raspberry PI 4:

`tensorflow/core/platform/hadoop/hadoop_file_system.cc:132] HadoopFileSystem load error: libhdfs.so: cannot open shared object file: No such file or directory`
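This message comes from TensorFlow trying to load `libhdfs.so` for its HDFS file-system support. Assuming the script only reads local files and never `hdfs://` paths, the line is noise rather than a failure, and TensorFlow's C++ logging can be quieted with the `TF_CPP_MIN_LOG_LEVEL` environment variable, set before TensorFlow is imported. The log prefix in the post is cut off, so it is not certain which level hides this particular line; trying 2 and then 3 is a reasonable approach. The helper name below is hypothetical:

```python
import os
import sys


def quiet_tensorflow_logs(level="2"):
    """Ask TensorFlow's C++ logger to drop low-severity messages.

    0 = show everything, 1 = hide INFO, 2 = also hide WARNING,
    3 = also hide ERROR. This only hides log lines; it fixes nothing.
    """
    if "tensorflow" in sys.modules:
        print("warning: tensorflow is already imported; set this earlier")
    os.environ["TF_CPP_MIN_LOG_LEVEL"] = str(level)


quiet_tensorflow_logs("2")
# import tensorflow as tf  # import only after the variable is set
```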