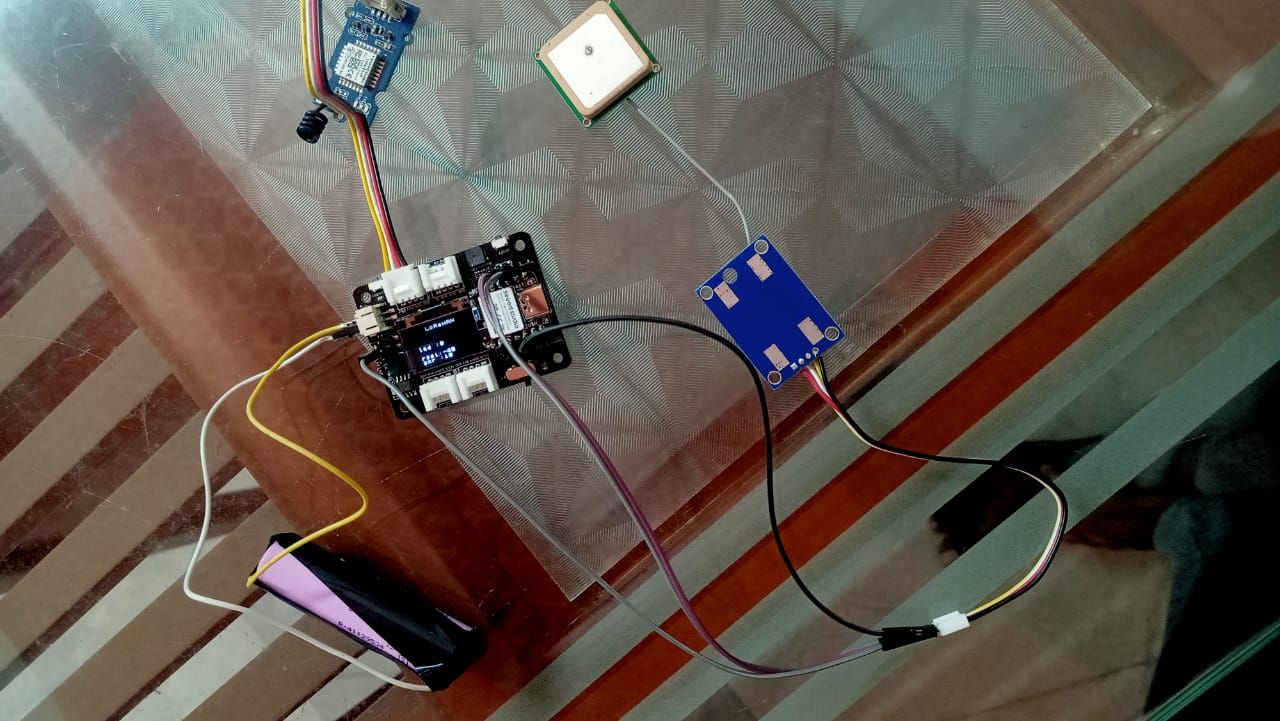

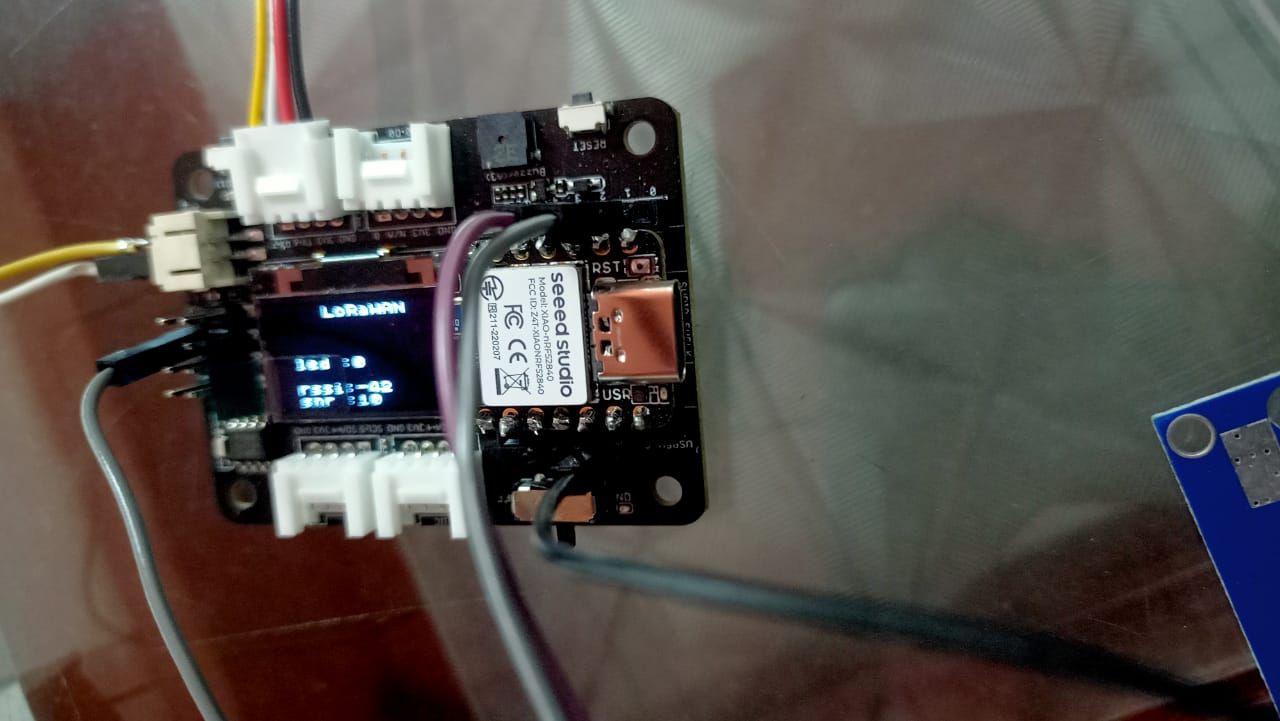

Error on Raspberry Pi 4 while importing TensorFlow.

-

Hi,

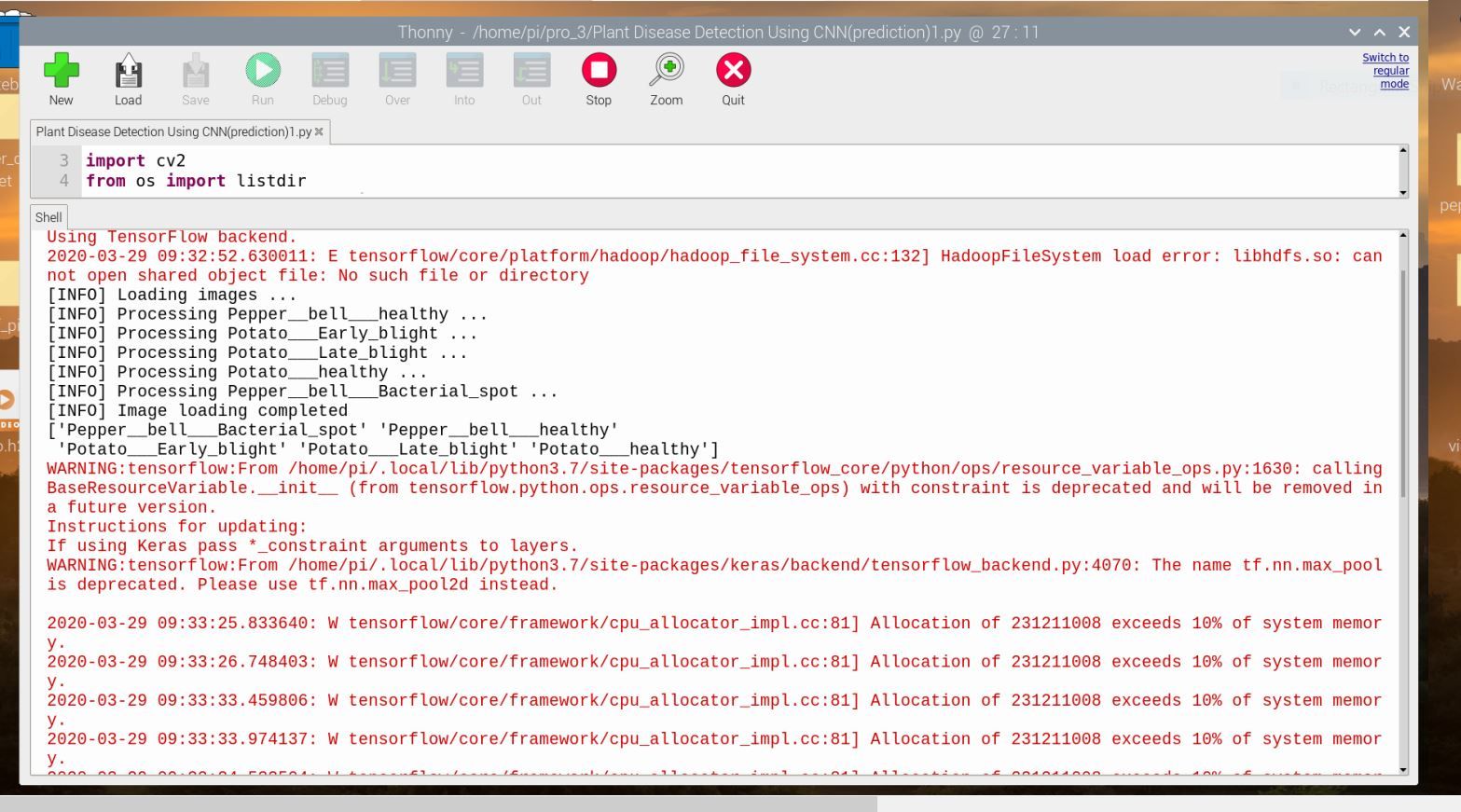

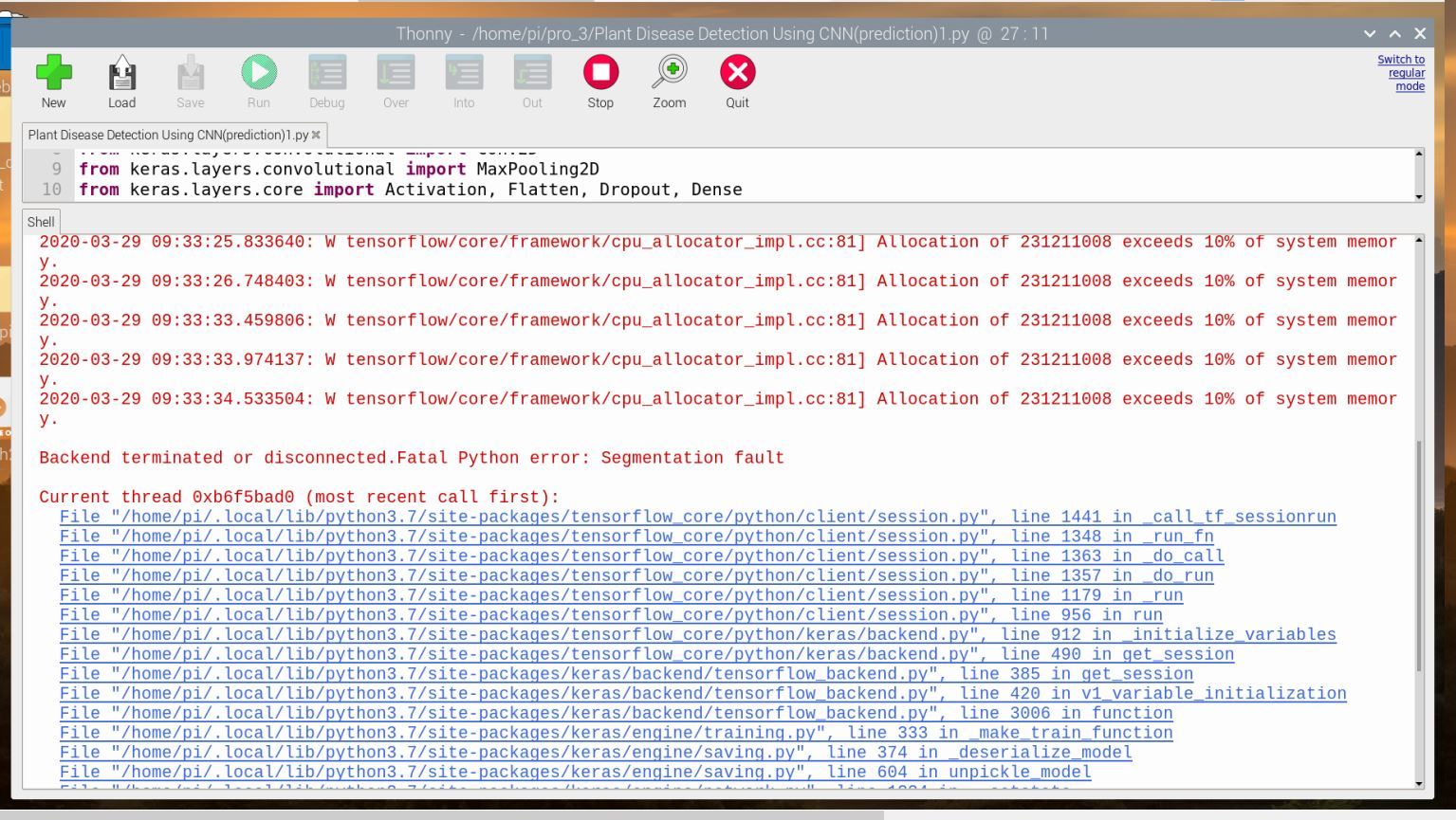

I came across this error after installing TensorFlow on my RPi 4.

Can you help me find a fix?

Error on Raspberry Pi 4: `tensorflow/core/platform/hadoop/hadoop_file_system.cc:132] HadoopFileSystem load error: libhdfs.so: cannot open shared object file: No such file or directory`

-

You are using a Python version that is too new for this TensorFlow build. I believe it is Python 3.7 or 3.8. Try downgrading Python to 3.6.5 or another compatible version listed in the documentation.
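Before reinstalling, you can check which interpreter you are running against the versions your TensorFlow build supports. A minimal sketch; the `SUPPORTED_VERSIONS` set is a placeholder assumption that follows the advice in this post, so replace it with the list from the TensorFlow install docs for your release.

```python
import sys

# Placeholder assumption: the (major, minor) Python versions your TensorFlow
# build supports. Take the real list from the TensorFlow install docs.
SUPPORTED_VERSIONS = {(3, 5), (3, 6)}


def is_supported(version_info=sys.version_info, supported=SUPPORTED_VERSIONS):
    """Return True if the interpreter's (major, minor) pair is in `supported`."""
    return (version_info[0], version_info[1]) in supported


if __name__ == "__main__":
    current = "{}.{}.{}".format(*sys.version_info[:3])
    if is_supported():
        print("Python", current, "is in the supported list")
    else:
        print("Python", current, "is not in the supported list; consider 3.6.x")
```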

-

@arunksoman Hi, I've run this code using Python 3.7 on Anaconda and it worked fine.

But when I ran it on the RPi 4, it showed the Hadoop error. I then updated the libraries and the Hadoop error was solved.

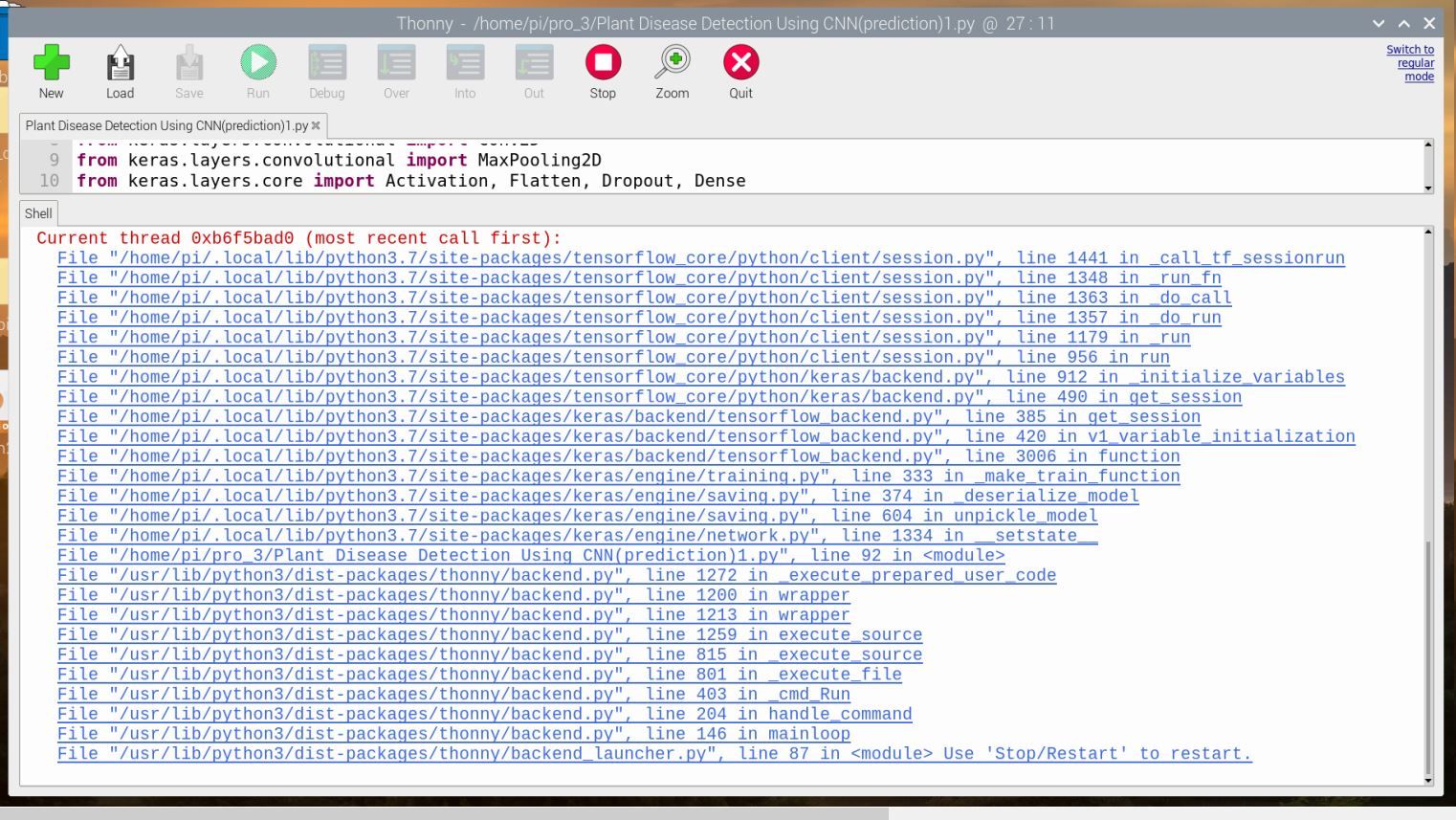

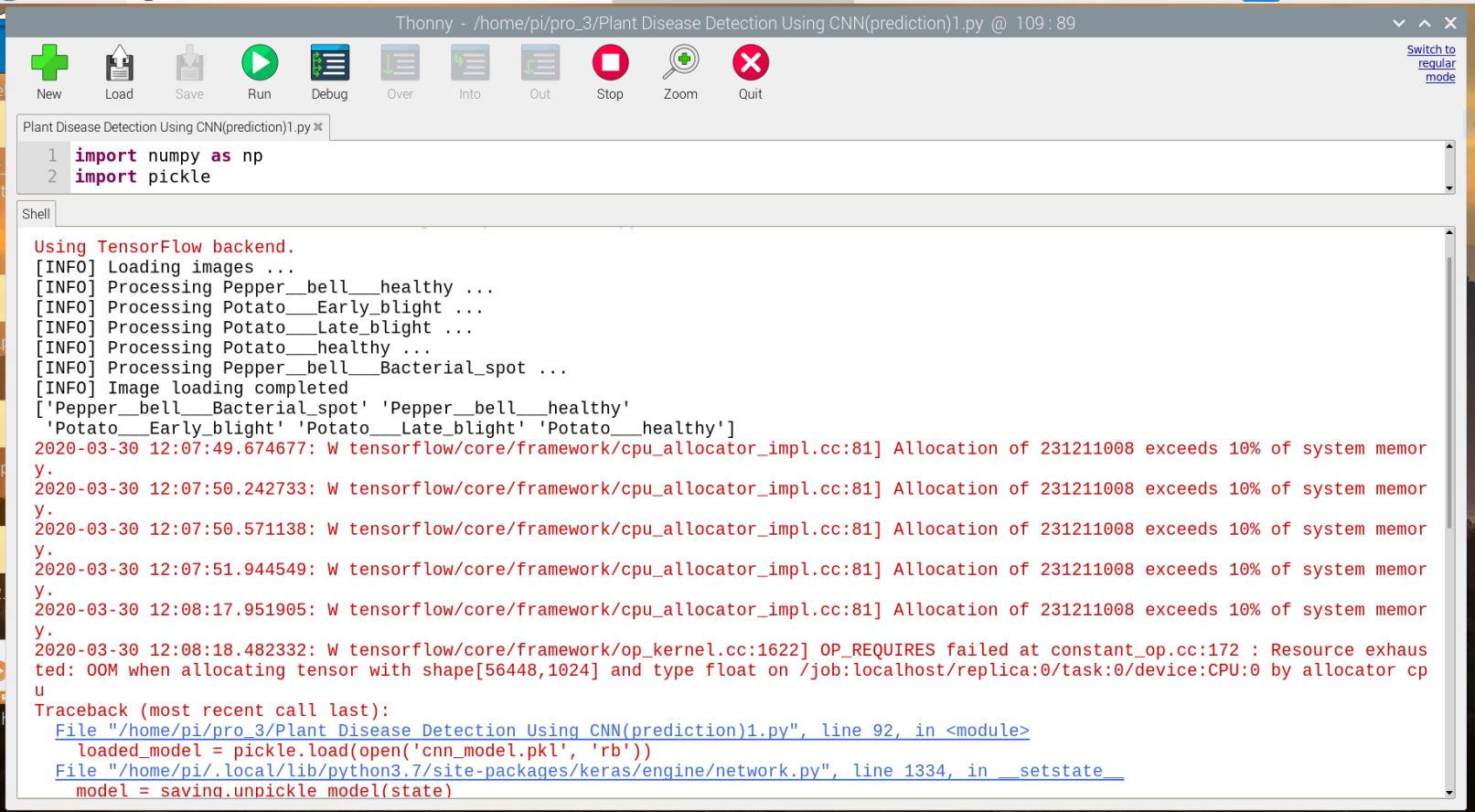

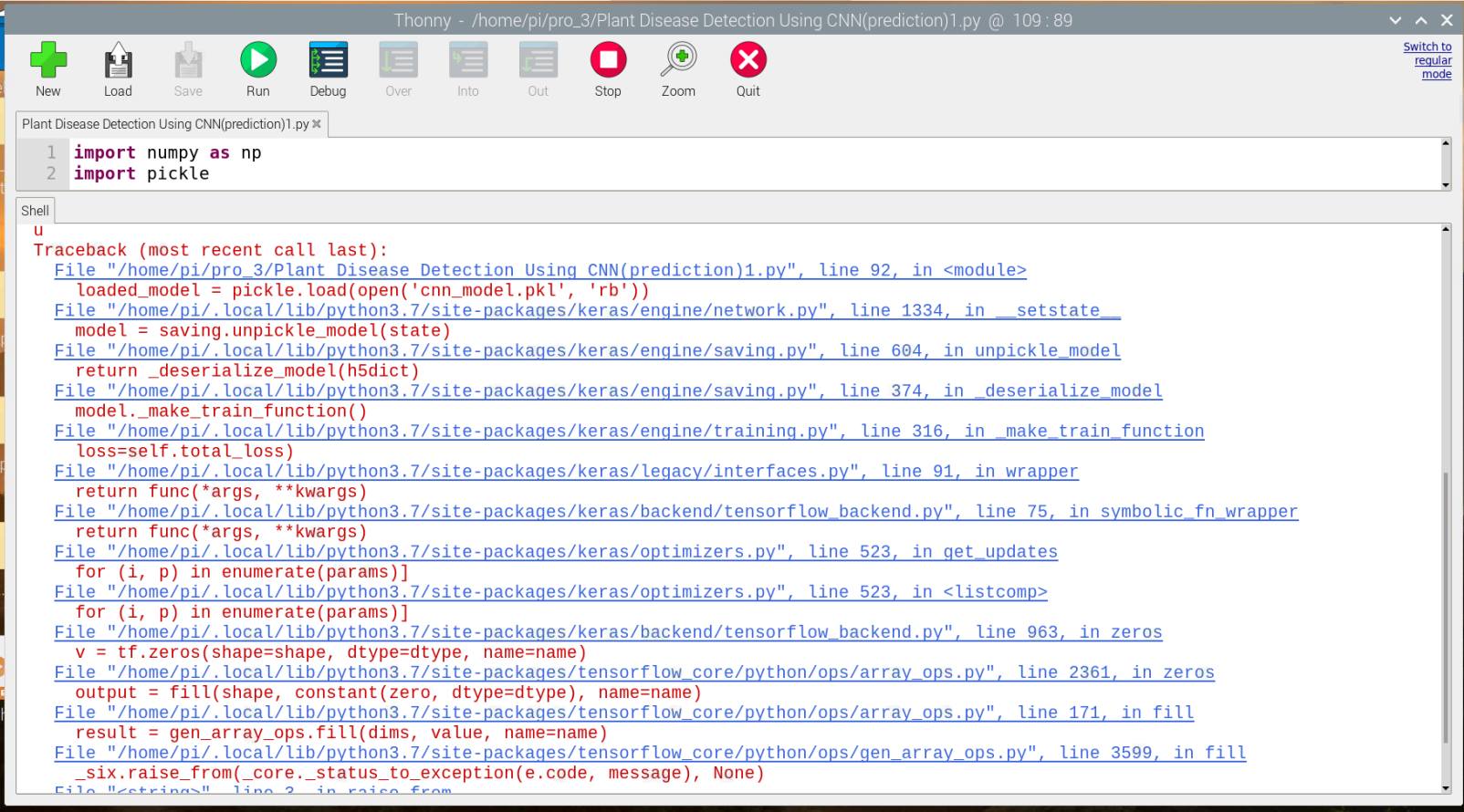

Now there is a new error.

Also, can you take a look at the 10% memory error? What can be done about it?

-

@sreu13 Which version of TensorFlow are you using? Did you read the TensorFlow docs? You can't simply run

`pip install tensorflow`

with Python 3.7.

-
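If you install from a prebuilt wheel file instead of plain `pip install tensorflow`, the wheel's file name has to match your interpreter and CPU architecture, otherwise pip reports that it is not a supported wheel on this platform. A minimal sketch that prints the tag to look for, assuming a default CPython 3 build on Linux (on 32-bit Raspbian, a Pi 4 typically reports `armv7l`); `expected_wheel_tag` is a hypothetical helper, not part of pip.

```python
import platform
import sys


def expected_wheel_tag(version_info=sys.version_info, machine=None):
    """Build a CPython wheel tag such as 'cp37-cp37m-linux_armv7l'.

    Assumes a default CPython 3 build: Python 3.7 and older use the 'm'
    (pymalloc) ABI suffix, which was dropped starting with Python 3.8.
    """
    if machine is None:
        machine = platform.machine()
    major_minor = (version_info[0], version_info[1])
    py = "cp{}{}".format(*major_minor)
    abi = py + ("m" if major_minor < (3, 8) else "")
    plat = "linux_" + machine.replace("-", "_").replace(".", "_")
    return "{}-{}-{}".format(py, abi, plat)


if __name__ == "__main__":
    print("Look for a TensorFlow wheel whose name ends in:", expected_wheel_tag() + ".whl")
```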

@arunksoman I installed TensorFlow from the link below.

I installed it without activating a virtual environment.

But this 10% memory issue is a serious pain.
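Assuming the "10%" message is TensorFlow's warning that a single allocation exceeds 10% of system memory, you can estimate whether a given input will trigger it before running the model. A rough sketch: it parses `/proc/meminfo` (Linux only) and compares 10% of a chosen field with the size of a float32 tensor. Which memory figure TensorFlow actually uses as its baseline is an assumption here (`MemTotal` by default; `MemFree` gives a more conservative estimate), and the batch shape is a hypothetical example.

```python
import os


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: value}, converting kB to bytes."""
    info = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        name, rest = line.split(":", 1)
        parts = rest.split()
        if not parts:
            continue
        value = int(parts[0])
        # Most fields are in kB (1024 bytes); some, e.g. HugePages_Total, have no unit.
        if len(parts) > 1 and parts[1] == "kB":
            value *= 1024
        info[name.strip()] = value
    return info


def float32_tensor_bytes(shape):
    """Bytes needed for a float32 tensor of the given shape (4 bytes per element)."""
    count = 1
    for dim in shape:
        count *= dim
    return count * 4


def exceeds_ten_percent(shape, meminfo_text, key="MemTotal"):
    """True if a float32 tensor of `shape` is larger than 10% of meminfo[key]."""
    threshold = parse_meminfo(meminfo_text)[key] * 0.10
    return float32_tensor_bytes(shape) > threshold


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        text = f.read()
    # Hypothetical input: a batch of 32 RGB images at 224x224.
    shape = (32, 224, 224, 3)
    print(shape, float32_tensor_bytes(shape), "bytes; exceeds 10%:",
          exceeds_ten_percent(shape, text))
```

The size grows with the product of all dimensions, so reducing the batch size or the input resolution shrinks the allocation proportionally to the number of elements.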

-

@arunksoman I installed TensorFlow version 2.0.0.
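With TensorFlow 2.x, Keras ships inside TensorFlow as `tf.keras`, so layers are imported from `tensorflow.keras.layers` rather than from the standalone `keras` package (`Conv2D` is the current name of the layer that older code calls `Convolution2D`). A minimal sketch that checks the installed major version before importing; `tf_major_version` and `load_conv2d` are hypothetical helpers, not part of TensorFlow.

```python
def tf_major_version(version):
    """Return the major version number from a string such as '2.0.0' or '1.15.0'."""
    return int(version.split(".", 1)[0])


def load_conv2d():
    """Import Conv2D from the Keras API bundled with TensorFlow 2.x (tf.keras)."""
    # Imported lazily so tf_major_version works even without TensorFlow installed.
    import tensorflow as tf

    if tf_major_version(tf.__version__) < 2:
        raise RuntimeError("this sketch expects TensorFlow 2.x, found " + tf.__version__)
    # In TF 2.x, use tensorflow.keras instead of the standalone keras package.
    from tensorflow.keras.layers import Conv2D

    return Conv2D
```

Usage, assuming TensorFlow 2.x is installed: `Conv2D = load_conv2d()`, then `Conv2D(32, (3, 3), activation="relu")` builds a convolution layer with 32 filters and a 3x3 kernel.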

-

@sreu13

Make sure you have installed TensorFlow 1.x, since the screenshot of your code shows something like

`from keras.layers.convolutional import Convolution2D`

That import is not correct for TensorFlow 2.0, since the Keras API is part of TensorFlow itself (`tf.keras`). Another thing you can try is expanding your file system. Do this at your own risk.

- Run `sudo raspi-config`, navigate to Advanced Options, and press Enter (I believe the Tab key is useful here).

- Choose Expand Filesystem, press Enter, and then select Finish.

- Your Pi may prompt you to reboot. If it doesn't, run `sudo reboot`.

- Then run the following commands one by one:
  - `sudo apt-get purge wolfram-engine`
  - `sudo apt-get purge libreoffice*`
  - `sudo apt-get clean`
  - `sudo apt-get autoremove`

- Then increase the swap memory by editing the swap configuration file with `sudo nano /etc/dphys-swapfile`. This opens the nano editor.

- Navigate to the variable `CONF_SWAPSIZE=100`. It indicates that your current swap is only 100 MB.

- Comment out that line and add a new one with a larger value. For example, for 2 GB of swap, change it to `#CONF_SWAPSIZE=100` followed by a new line `CONF_SWAPSIZE=2048`.

- Save the file, exit nano, and run `sudo reboot`.
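The swap edit in these steps can also be scripted. A minimal sketch, assuming `/etc/dphys-swapfile` contains a plain `CONF_SWAPSIZE=<MB>` line as described; it transforms the file contents as a string so you can review the result before copying it back with `sudo`, and it reads `SwapTotal` from `/proc/meminfo` to confirm the new size after rebooting. Both helper names are hypothetical.

```python
import os
import re


def set_swapsize(config_text, new_mb):
    """Comment out active CONF_SWAPSIZE lines and add one with the new size in MB."""
    out = []
    inserted = False
    for line in config_text.splitlines():
        if re.match(r"\s*CONF_SWAPSIZE\s*=", line):
            out.append("#" + line.lstrip())  # keep the old value as a comment
            if not inserted:
                out.append("CONF_SWAPSIZE={}".format(new_mb))
                inserted = True
        else:
            out.append(line)
    if not inserted:
        # No active setting found: append one at the end of the file.
        out.append("CONF_SWAPSIZE={}".format(new_mb))
    return "\n".join(out) + "\n"


def swap_total_mb(meminfo_text):
    """Return SwapTotal from /proc/meminfo-style text, in MiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("SwapTotal:"):
            return int(line.split()[1]) // 1024
    raise ValueError("SwapTotal not found")


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        print("Current swap: {} MiB".format(swap_total_mb(f.read())))
```

For example, write the output of `set_swapsize(open("/etc/dphys-swapfile").read(), 2048)` to a temporary file, inspect it, then `sudo cp` it over the original and reboot.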

I believe that if this does not help, you will have to consider dedicated hardware such as an Intel Movidius Neural Compute Stick or an NVIDIA Jetson Nano.

-

@arunksoman I'll try this method, but when I change the swap settings, will Raspbian and the files on it be affected?

-

@sreu13 I edited my comment; please read it again. Most likely it will not affect your file system, but in some scenarios it can make things worse.

-

@arunksoman Hi,

At this point I'm not willing to risk my RPi 4, so I thought of connecting it to Google Cloud (I already have an account with $300 of credit) and running the code there.

But I don't know whether the following process can be done:

- Sending an image from the RPi 4 to the cloud.

- Using the cloud to run the prediction.

- Automating both steps, so that the image is sent to the cloud when the Pi boots up (the VM can be started whenever necessary).

Is this possible?
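The Pi side of such a pipeline could look like the sketch below. It assumes you run your model on the cloud VM behind an HTTP endpoint that accepts a raw JPEG in the request body and returns the prediction as text; the URL, port, and path are hypothetical (203.0.113.10 is a documentation-only address), and the server side is not shown. Only the Python standard library is used.

```python
import sys
import urllib.request

# Hypothetical endpoint on the cloud VM; replace with your own address.
PREDICT_URL = "http://203.0.113.10:8080/predict"


def build_predict_request(image_bytes, url=PREDICT_URL):
    """Build a POST request that sends one JPEG image as the raw request body."""
    return urllib.request.Request(
        url,
        data=image_bytes,
        headers={"Content-Type": "image/jpeg"},
        method="POST",
    )


def send_image(path, url=PREDICT_URL, timeout=30):
    """Read an image file, send it to the endpoint, and return the response text."""
    with open(path, "rb") as f:
        request = build_predict_request(f.read(), url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__" and len(sys.argv) > 1:
    print(send_image(sys.argv[1]))
```

To run it at boot, one option is a crontab entry using cron's `@reboot` schedule, for example `@reboot python3 /home/pi/send_image.py /home/pi/capture.jpg` (paths hypothetical); a systemd service that starts after the network is up is another.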