HID Mouse Emulation using ESP32

-

Hi all,

I am trying to build an application that emulates a mouse when I plug my device into the computer through a USB cable. I've seen applications where the ESP32 pairs with the computer over Bluetooth and emulates a mouse, but I only want to do the emulation over the wire.

-

The ESP32 doesn't have any native USB support, right?

-

@salmanfaris I guess not, it doesn't.

-

@kowshik1729 Then I think it will be difficult. In this video, https://www.youtube.com/watch?v=po3FBdY0GS4 , bitluni's lab used a CH559 USB host controller in order to use the USB HID services.

Another possibility is to port V-USB to the ESP32. V-USB is a software-only implementation of a low-speed USB device for Atmel’s AVR microcontrollers.

I found some GitHub repos that contain BLE HID.
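For reference, whichever route ends up providing the wired USB connection, the data a basic mouse sends is small. In the HID boot protocol, a mouse report is three bytes: a button bitmap, then signed X and Y displacement. Below is a minimal sketch of packing such a report. The helper name `makeMouseReport` and the constants are hypothetical, and actually sending the report over USB depends on the hardware and USB stack you choose, which is not shown here.

```cpp
#include <algorithm>
#include <array>
#include <cstdint>

// Button bits in byte 0 of a boot-protocol mouse report.
constexpr std::uint8_t kLeftButton = 0x01;
constexpr std::uint8_t kRightButton = 0x02;
constexpr std::uint8_t kMiddleButton = 0x04;

// Clamp a relative movement to the -127..127 range used for each axis.
static std::int8_t clampAxis(int value) {
    return static_cast<std::int8_t>(std::max(-127, std::min(127, value)));
}

// Hypothetical helper: pack one 3-byte report (buttons, dx, dy).
std::array<std::uint8_t, 3> makeMouseReport(std::uint8_t buttons, int dx, int dy) {
    std::array<std::uint8_t, 3> report = {{
        static_cast<std::uint8_t>(buttons & 0x07),  // only the 3 button bits
        static_cast<std::uint8_t>(clampAxis(dx)),   // two's-complement X delta
        static_cast<std::uint8_t>(clampAxis(dy)),   // two's-complement Y delta
    }};
    return report;
}
```

For example, `makeMouseReport(kLeftButton, 5, -3)` describes "left button held, moved 5 right and 3 up" (negative Y is up in the usual convention). Movements larger than 127 counts would need to be split across several reports.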

Recent Posts

-

@mahesh02 With the current Arduino ESP32 board files, we don't need to install ESP-NN separately.

But try selecting esp32 board version 3.1.3 and check, as I also faced issues with the latest esp32 board file.

-

I'm having the same error as below; it would be really awesome if you could help me.

In file included from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_classifier_types.h:40,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_model_types.h:40,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_classifier.h:38,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/mahesh02-project-1_inferencing.h:49,

from C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:27:

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/model-parameters/model_metadata.h:108:2: warning: #warning 'EI_CLASSFIER_OBJECT_DETECTION_COUNT' is used for the guaranteed minimum number of objects detected. To get all objects during inference use 'bounding_boxes_count' from the 'ei_impulse_result_t' struct instead. [-Wcpp]

#warning 'EI_CLASSFIER_OBJECT_DETECTION_COUNT' is used for the guaranteed minimum number of objects detected. To get all objects during inference use 'bounding_boxes_count' from the 'ei_impulse_result_t' struct instead.

^~~~~~~

In file included from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/memory.hpp:38,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/ei_alloc.h:34,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/ei_vector.h:34,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/numpy_types.h:40,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/ei_dsp_handle.h:35,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_model_types.h:41,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_classifier.h:38,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/mahesh02-project-1_inferencing.h:49,

from C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:27:

c:\users\mahesh\onedrive\documents\arduino\libraries\mahesh02-project-1_inferencing\src\edge-impulse-sdk\porting\ei_classifier_porting.h:310: warning: "EI_PORTING_ARDUINO" redefined

#define EI_PORTING_ARDUINO 0

c:\users\mahesh\onedrive\documents\arduino\libraries\mahesh02-project-1_inferencing\src\edge-impulse-sdk\porting\ei_classifier_porting.h:279: note: this is the location of the previous definition

#define EI_PORTING_ARDUINO 1

In file included from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/speechpy.hpp:35,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_dsp.h:40,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_classifier.h:41,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/mahesh02-project-1_inferencing.h:49,

from C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:27:

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/feature.hpp: In static member function 'static int ei::speechpy::feature::mfe(ei::matrix_t*, ei::matrix_t*, ei::signal_t*, uint32_t, float, float, uint16_t, uint16_t, uint32_t, uint32_t, uint16_t)':

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/feature.hpp:236:52: warning: missing initializer for member 'ei::speechpy::ei_stack_frames_info::frame_ixs' [-Wmissing-field-initializers]

stack_frames_info_t stack_frame_info = { 0 };

^

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/feature.hpp:236:52: warning: missing initializer for member 'ei::speechpy::ei_stack_frames_info::frame_length' [-Wmissing-field-initializers]

In file included from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/speechpy.hpp:35,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_dsp.h:40,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_classifier.h:41,

from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/mahesh02-project-1_inferencing.h:49,

from C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:27:

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/feature.hpp: In static member function 'static int ei::speechpy::feature::mfe_v3(ei::matrix_t*, ei::matrix_t*, ei::signal_t*, uint32_t, float, float, uint16_t, uint16_t, uint32_t, uint32_t, uint16_t)':

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/feature.hpp:436:52: warning: missing initializer for member 'ei::speechpy::ei_stack_frames_info::frame_ixs' [-Wmissing-field-initializers]

stack_frames_info_t stack_frame_info = { 0 };

^

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/feature.hpp:436:52: warning: missing initializer for member 'ei::speechpy::ei_stack_frames_info::frame_length' [-Wmissing-field-initializers]

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/feature.hpp: In static member function 'static int ei::speechpy::feature::spectrogram(ei::matrix_t*, ei::signal_t*, float, float, float, uint16_t, uint16_t)':

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/feature.hpp:576:52: warning: missing initializer for member 'ei::speechpy::ei_stack_frames_info::frame_ixs' [-Wmissing-field-initializers]

stack_frames_info_t stack_frame_info = { 0 };

^

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/dsp/speechpy/feature.hpp:576:52: warning: missing initializer for member 'ei::speechpy::ei_stack_frames_info::frame_length' [-Wmissing-field-initializers]

In file included from C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/mahesh02-project-1_inferencing.h:49,

from C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:27:

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_classifier.h: In function 'EI_IMPULSE_ERROR {anonymous}::process_impulse(ei_impulse_handle_t*, ei::signal_t*, ei_impulse_result_t*, bool)':

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_classifier.h:307:23: error: format '%lu' expects argument of type 'long unsigned int', but argument 2 has type 'size_t' {aka 'unsigned int'} [-Werror=format=]

ei_printf("ERR: Out of memory, can't allocate matrix_ptrs[%lu]\n", ix);

^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ ~~

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_classifier.h:312:23: error: format '%lu' expects argument of type 'long unsigned int', but argument 2 has type 'size_t' {aka 'unsigned int'} [-Werror=format=]

ei_printf("ERR: Out of memory, can't allocate matrix_ptrs[%lu]\n", ix);

^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ ~~

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_classifier.h: In function 'EI_IMPULSE_ERROR {anonymous}::process_impulse_continuous(ei_impulse_handle_t*, ei::signal_t*, ei_impulse_result_t*, bool)':

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_classifier.h:558:27: error: format '%lu' expects argument of type 'long unsigned int', but argument 2 has type 'size_t' {aka 'unsigned int'} [-Werror=format=]

ei_printf("ERR: Out of memory, can't allocate matrix_ptrs[%lu]\n", ix);

^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ ~~

C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing\src/edge-impulse-sdk/classifier/ei_run_classifier.h:563:27: error: format '%lu' expects argument of type 'long unsigned int', but argument 2 has type 'size_t' {aka 'unsigned int'} [-Werror=format=]

ei_printf("ERR: Out of memory, can't allocate matrix_ptrs[%lu]\n", ix);

^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ ~~

C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino: In function 'void loop()':

C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:181:38: warning: missing initializer for member 'ei_impulse_result_t::bounding_boxes_count' [-Wmissing-field-initializers]

ei_impulse_result_t result = { 0 };

^

C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:181:38: warning: missing initializer for member 'ei_impulse_result_t::classification' [-Wmissing-field-initializers]

C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:181:38: warning: missing initializer for member 'ei_impulse_result_t::anomaly' [-Wmissing-field-initializers]

C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:181:38: warning: missing initializer for member 'ei_impulse_result_t::timing' [-Wmissing-field-initializers]

C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:181:38: warning: missing initializer for member 'ei_impulse_result_t::copy_output' [-Wmissing-field-initializers]

C:\Users\Mahesh\AppData\Local\Temp\arduino_modified_sketch_680639\esp32_camera.ino:181:38: warning: missing initializer for member 'ei_impulse_result_t::postprocessed_output' [-Wmissing-field-initializers]

cc1plus.exe: some warnings being treated as errors

Using library mahesh02-project-1_inferencing at version 1.0.1 in folder: C:\Users\Mahesh\OneDrive\Documents\Arduino\libraries\mahesh02-project-1_inferencing

exit status 1

Error compiling for board AI Thinker ESP32-CAM.

-
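The only hard errors in the log above are the four `-Werror=format=` lines: `%lu` expects `unsigned long`, but on this toolchain `size_t` is `unsigned int`, as the message itself says. A common way to make such a call portable is to cast the argument so it matches the specifier (or to use `%zu`, if the C library's printf supports it). The sketch below is an assumption about how that could look, not the SDK's own fix; `formatAllocError` is a hypothetical stand-in for the `ei_printf` call in `ei_run_classifier.h`.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>

// Hypothetical stand-in for the failing ei_printf() call in the log.
std::string formatAllocError(std::size_t ix) {
    char buf[96];
    // Casting to unsigned long makes the argument match %lu on every
    // target, whether size_t is unsigned int or unsigned long.
    std::snprintf(buf, sizeof(buf),
                  "ERR: Out of memory, can't allocate matrix_ptrs[%lu]",
                  static_cast<unsigned long>(ix));
    return std::string(buf);
}
```

Editing a generated library by hand is a workaround; picking a board package version that builds cleanly, as suggested earlier in this thread, avoids touching the SDK sources.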

This also happened to me when I was given a task at my company, so here is what I can recommend:

Ensure that the correct board, "AI Thinker ESP32-CAM," is selected in the Tools menu of your Arduino IDE.

If the board is not listed, you may need to install it through the Board Manager. Search for "ESP32" and install the latest version.

Ensure that necessary libraries like ESP32-CAM, WiFi, and HTTPClient are installed. You can install them through the Library Manager.

Carefully check your code for typos, missing semicolons, or incorrect syntax.

Verify that you're using the correct function names and parameters.

-

Hi @PumpedMedusa, the range depends on the antenna and how much TX power you have.

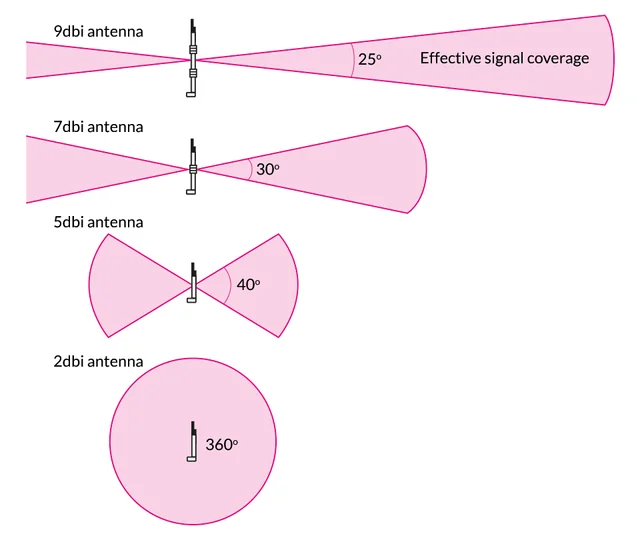

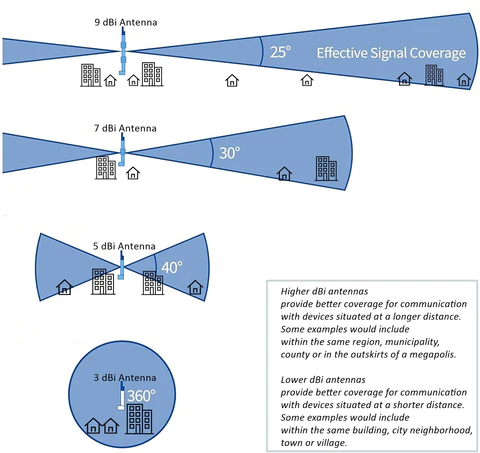

To choose an antenna, you first need to figure out where your nodes will be; take a look at the image. The antenna acts like a "torch": a higher dBi rating means the beam is more focused, so it reaches farther but covers a narrower angle, like a laser.

Also, obstacles such as buildings, trees, and mountains will affect the signal and weaken it.

So, to answer your question "What is the best LoRa module to use for long distance network?": it really depends on where you are planning to put the nodes and how high you are putting the gateway. Let me know your thoughts.
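To make the antenna gain and TX power trade-off concrete, here is a rough link-budget sketch using the standard free-space path loss formula (distance in km, frequency in MHz). It is an idealized upper bound only: it ignores the obstacles mentioned above, antenna height, and terrain, so real ranges are much shorter. The function names are hypothetical, and any numbers you feed in (TX power, gains, receiver sensitivity) are assumptions to be taken from your own module's datasheet.

```cpp
#include <cmath>

// Free-space path loss in dB for a distance in km and frequency in MHz.
double freeSpacePathLossDb(double distanceKm, double freqMhz) {
    return 20.0 * std::log10(distanceKm) + 20.0 * std::log10(freqMhz) + 32.44;
}

// Distance (km) at which the received power drops to the receiver
// sensitivity, assuming free space only (no obstacles, no fade margin).
double maxFreeSpaceRangeKm(double txPowerDbm, double txGainDbi,
                           double rxGainDbi, double rxSensitivityDbm,
                           double freqMhz) {
    // Total loss the link can tolerate before the signal is too weak.
    const double budgetDb =
        txPowerDbm + txGainDbi + rxGainDbi - rxSensitivityDbm;
    return std::pow(10.0,
                    (budgetDb - 32.44 - 20.0 * std::log10(freqMhz)) / 20.0);
}
```

In this model every extra 6 dB, whether from antenna gain or TX power, roughly doubles the free-space range, which is why a higher-dBi antenna pointed in the right direction helps so much.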

-

@Parves-DOMINO Were you able to solve the issue?